Queen’s University’s Human Media Lab unveils world’s first interactive physical 3D graphics

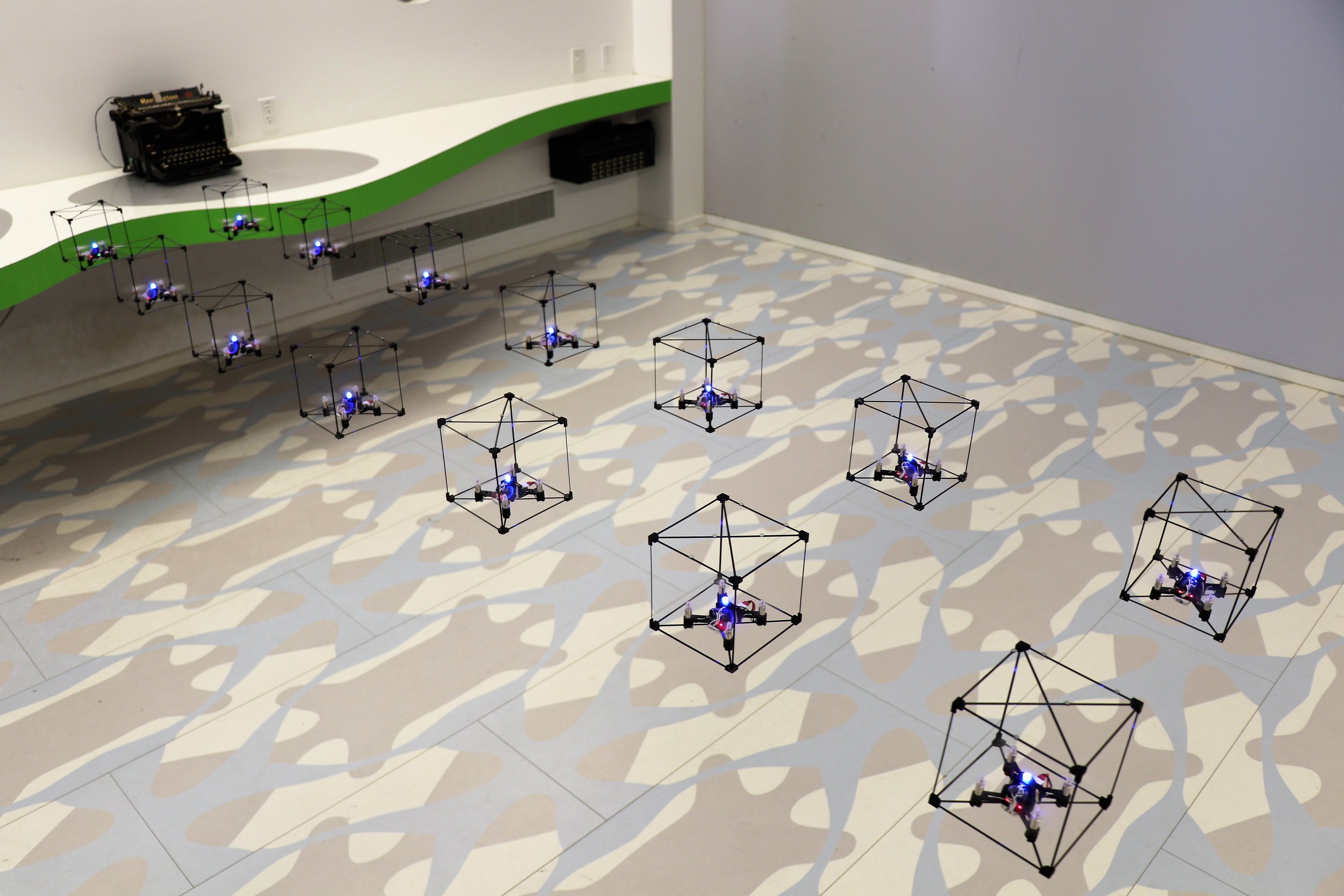

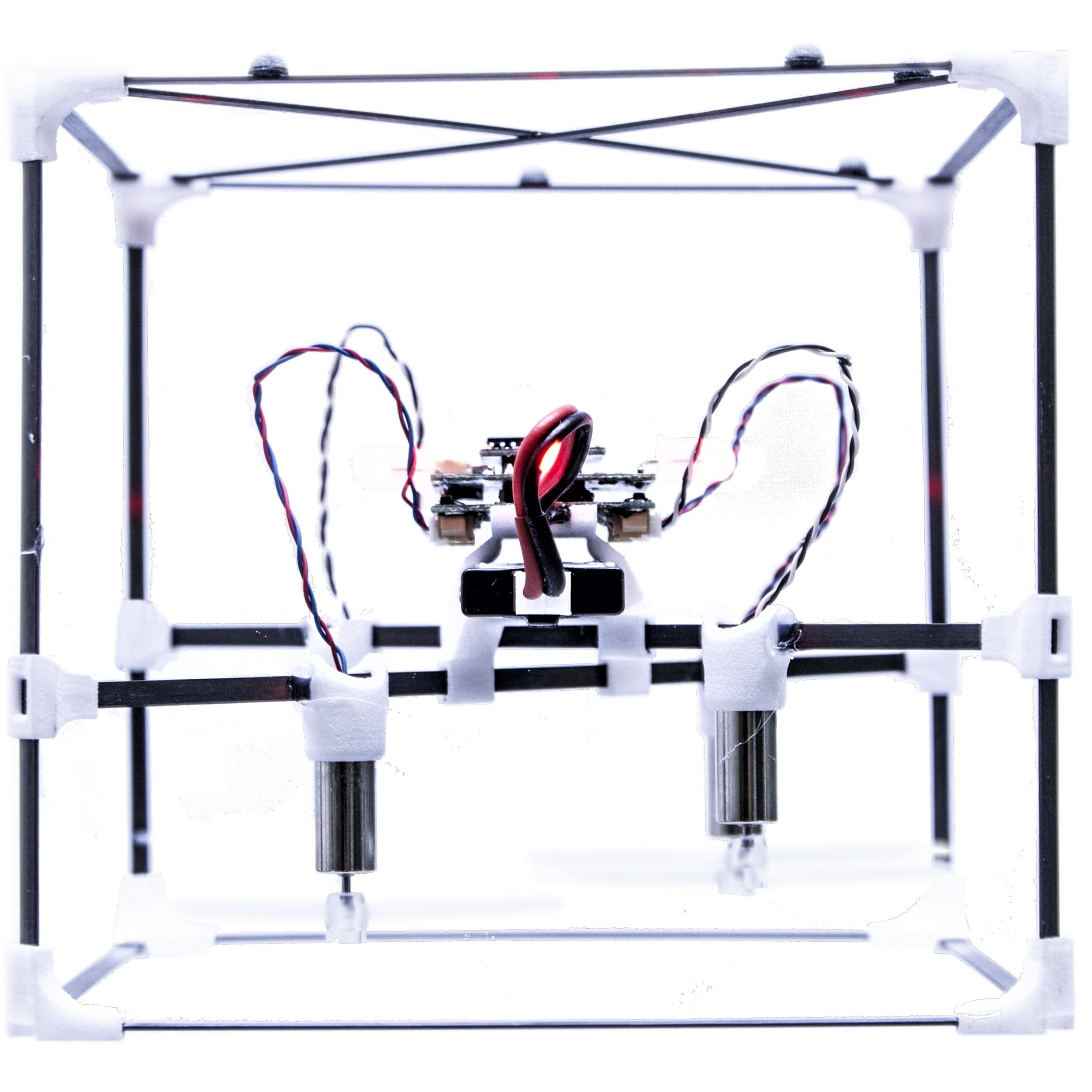

Berlin – October 15th. The Human Media Lab at Queen’s University in Canada will unveil GridDrones, a new kind of computer graphics system that allows users to sculpt in 3D with physical pixels. Unlike in Minecraft, every 3D pixel is real and can be touched by the user. A successor to BitDrones and the Flying LEGO exhibit, which allowed children to control a 3D swarm of drone pixels using a remote control, GridDrones allows users to physically touch each drone, dragging it out of a two-dimensional grid to create physical 3D models. Using a smartphone app, users can assign a spatial relationship to the 3D pixels, which tells each one how far to move when a neighbouring drone is pulled upwards or downwards. By pulling and pushing pixels up and down, users can sculpt terrains, architectural models, and even physical animations. The result is one of the first functional forms of programmable matter.
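The neighbour relationship described above can be illustrated with a small sketch. This is not the lab's implementation: the function name `pull`, the `ratio` and `radius` parameters, the geometric falloff, and the 3-by-5 grid layout are all assumptions made for illustration. It shows one plausible way a pull on one drone could translate into smaller moves for the drones around it.

```python
def pull(heights, row, col, dz, ratio=0.5, radius=1):
    """Return a new height grid after the drone at (row, col) is moved by dz.

    Hypothetical rule: a drone at grid distance d from the pulled drone
    moves by dz * ratio**d, and drones farther than `radius` do not move.
    """
    new_heights = [r[:] for r in heights]  # copy so the input grid is untouched
    for r in range(len(heights)):
        for c in range(len(heights[r])):
            # Chebyshev distance: diagonal neighbours count as distance 1
            d = max(abs(r - row), abs(c - col))
            if d <= radius:
                new_heights[r][c] += dz * ratio ** d
    return new_heights


if __name__ == "__main__":
    # 15 drones arranged as a flat 3 x 5 grid (the layout is an assumption)
    grid = [[0.0] * 5 for _ in range(3)]
    for line in pull(grid, row=1, col=2, dz=1.0):
        print(line)
```

A `ratio` of 1.0 would make neighbours follow the pulled drone exactly, while a `ratio` of 0.0 would leave them in place.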

“Unlike 3D printing materials, GridDrones do not require structural support as each element self-levitates to overcome gravity. Unlike 3D prints, the system is bi-directional: you can change the ‘print’ simply by picking up the pixels and re-arranging them,” says Roel Vertegaal, director of the Human Media Lab and professor of Human-Computer Interaction at Queen’s University in Kingston, Canada. “This is an important first step towards robotic systems that render graphics as physical reality, rather than as just light. This means users will have a fully immersive experience without a head-mounted display, one that provides haptics for free.”

While the system is still low-resolution, it is capable of real-time rendering of physical structures such as bridges (catenary arches), NURBS surfaces, and 3D animations of flying birds. GridDrones consists of 15 nano quadcopters that maintain their position with millimeter accuracy using a Vicon motion capture system. Corresponding software objects track where each drone needs to go, and proportional-integral-derivative (PID) control loops ensure that the drones move there smoothly. This means the system can be programmed the same way as any computer graphics system, with the difference that GridDrones uses physical voxels rather than software polygons to render.
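For readers unfamiliar with proportional-integral-derivative control, the following is a minimal one-dimensional sketch of the idea, not the GridDrones flight code. The `PID` class, the `simulate_altitude` helper, the gain values, and the simplified physics (a point mass whose acceleration equals the controller output, with gravity assumed to be cancelled elsewhere) are all illustrative assumptions. In the real system the measured position would come from motion capture rather than from a simulation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller for a single axis (gains are illustrative)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, target: float, measured: float, dt: float) -> float:
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_altitude(target: float, steps: int, dt: float) -> float:
    """Drive a simulated point mass from altitude 0 towards `target`.

    Assumption: the controller output is applied directly as vertical
    acceleration, and gravity is compensated separately.
    """
    pid = PID(kp=12.0, ki=8.0, kd=6.0)
    altitude, velocity = 0.0, 0.0
    for _ in range(steps):
        accel = pid.update(target, altitude, dt)
        velocity += accel * dt
        altitude += velocity * dt
    return altitude


if __name__ == "__main__":
    print(simulate_altitude(target=1.0, steps=1000, dt=0.01))
```

The proportional term pulls the drone towards its target, the derivative term damps the approach so that motion is smooth, and the integral term removes any steady offset that remains.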

“As an example, imagine a designer creating an arched doorway,” says Dr. Vertegaal. “All they need to do is pull out a drone and sculpt a physical, life-sized doorway that you can immediately walk through. The system is particularly suited for physical animations and can be used as a design platform for drone exhibits. One of the unique aspects is that the drones are so small, they can fly indoors and are safe to touch. After a model is complete, it can be printed on a 3D printer. Another application would be a physical version of the popular voxel game Minecraft.”

The Human Media Lab worked closely with Prof. Tim Merritt of Aalborg University in Denmark to create the system. “Future versions of the system will feature billions of drones that are so small that they will be able to cling together to create physical structures that are not discernible from real prints,” says Prof. Merritt. “This technology has the potential to ultimately displace Virtual Reality. The real advantage is that it is situated in the user’s real reality. That’s why we call it a Real Reality Interface.”

Reference

Braley, S., Rubens, C., Merritt, T. and Vertegaal, R. 2018. GridDrones: A Self-Levitating Physical Voxel Lattice for Interactive 3D Surface Deformations. In Proceedings of UIST’18 Conference on User Interface Software and Technology, 10 pp.

Media Footage

A rights-free video is available above, and high-resolution photographs of the flying drones are available rights-free below. Feel free to copy them to other platforms, but please credit the Human Media Lab.

Media contact:

Dave Rideout

Communications Officer, Media Relations, Queen’s University

613-533-6000 ext. 79648

dave.rideout@queensu.ca

About the Human Media Lab at Queen’s University

The Human Media Lab (HML) at Queen’s University is one of Canada’s premier Human-Computer Interaction (HCI) laboratories. Inventions include eye contact sensors; smart pause; attention-aware smartphones; PaperPhone, the world’s first flexible phone; PaperTab, the world’s first paper computer; and TeleHuman2, the world’s first holographic teleconferencing system. HML is directed by Dr. Roel Vertegaal, Professor of HCI at the School of Computing at Queen’s University. Working with him are a number of graduate and undergraduate students in engineering, design and psychology.

About Human-Centered Computing at Aalborg University

Human-Centered Computing at Aalborg University is a research unit in the Department of Computer Science that provides research and teaching in Interaction Design (IxD) and Human-Computer Interaction (HCI). Since 1974, Aalborg University (AAU) has provided knowledge, development and highly qualified graduates to the outside world. More than 20,000 students are enrolled at Aalborg University, and more than 3,500 staff members are employed across the University’s three campuses in Aalborg, Esbjerg and Copenhagen. For more information about the research unit, please visit http://www.cs.aau.dk/research/human-centered-computing