Queen’s University’s Human Media Lab to unveil world’s first flexible lightfield-enabled smartphone.

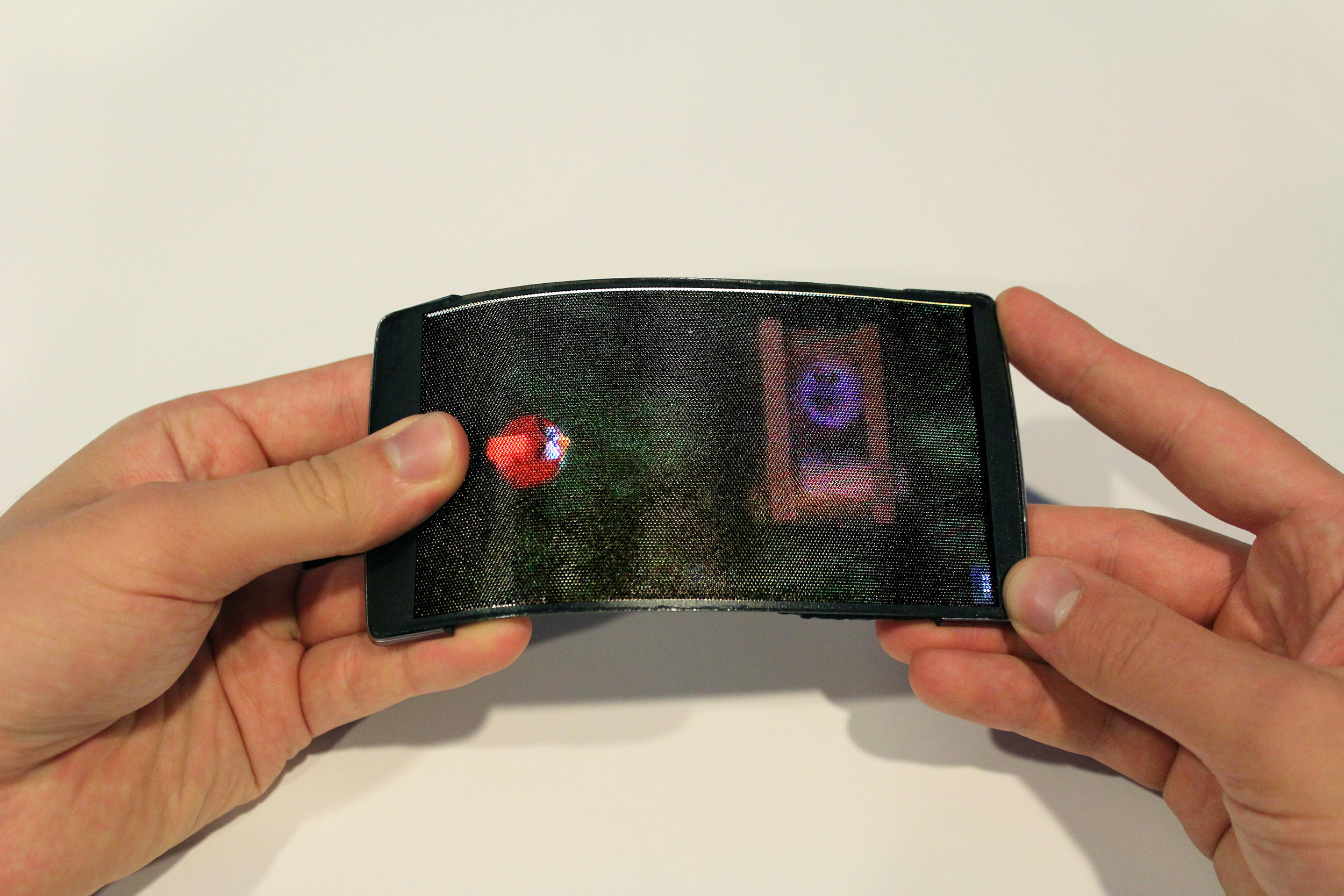

KINGSTON - Researchers at the Human Media Lab at Queen’s University have developed the world’s first holographic flexible smartphone. The device, dubbed HoloFlex, is capable of rendering 3D images with motion parallax and stereoscopy to multiple simultaneous users without head tracking or glasses.

“HoloFlex offers a completely new way of interacting with your smartphone. It allows for glasses-free interaction with 3D video and images in a way that does not encumber the user,” says Dr. Roel Vertegaal, director of the Human Media Lab.

HoloFlex features a 1920x1080 full high-definition Flexible Organic Light Emitting Diode (FOLED) touchscreen display. Images are rendered into 12-pixel-wide circular blocks, each showing the full view of the 3D object from a particular viewpoint. These pixel blocks project through a 3D-printed flexible microlens array of over 16,000 fisheye lenses. The resulting image, with a resolution of 160 x 104, allows users to inspect a 3D object from any angle simply by rotating the phone.
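The figures above can be cross-checked with a little arithmetic. One lens per 12-pixel block gives 1920 / 12 = 160 lenses across the panel. A square grid would allow only 1080 / 12 = 90 rows. If, however, the lenses are hexagonally packed (alternate rows offset by half a lens, so rows sit 12·√3/2 ≈ 10.39 pixels apart), the panel fits roughly 104 rows, and 160 × 104 = 16,640 lenses, in line with "over 16,000". The hexagonal layout is an assumption made here; the release does not describe how the lenses are arranged, and `lens_grid` is a hypothetical helper.

```python
import math

# Panel resolution and block width stated in the release.
PANEL_W, PANEL_H = 1920, 1080
BLOCK_PX = 12  # one circular pixel block per lens


def lens_grid(panel_w: int, panel_h: int, block_px: int,
              hexagonal: bool = True) -> tuple[int, int]:
    """Estimate how many lens columns and rows fit on the panel.

    ASSUMPTION: with hexagonal packing, alternate rows are offset by half a
    block, so row centers sit block_px * sqrt(3) / 2 apart instead of
    block_px apart. The release does not state the actual lens layout.
    """
    cols = panel_w // block_px
    row_pitch = block_px * math.sqrt(3) / 2 if hexagonal else block_px
    rows = round(panel_h / row_pitch)  # approximate: ignores edge clipping
    return cols, rows


if __name__ == "__main__":
    for hexagonal in (False, True):
        cols, rows = lens_grid(PANEL_W, PANEL_H, BLOCK_PX, hexagonal)
        layout = "hexagonal" if hexagonal else "square"
        print(f"{layout}: {cols} x {rows} = {cols * rows} lenses")
```

Running it prints 160 x 90 = 14400 lenses for a square grid and 160 x 104 = 16640 lenses for the hexagonal one, which is why hexagonal packing is a plausible reading of the published numbers.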

Building on the success of the ReFlex flexible smartphone, HoloFlex is also equipped with a bend sensor that lets the user bend the phone to move objects along the z-axis of the display. HoloFlex is powered by a 1.5 GHz Qualcomm Snapdragon 810 processor and 2 GB of memory. The board runs Android 5.1 and includes an Adreno 430 GPU supporting OpenGL ES 3.1.
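The release does not describe how the bend sensor's signal is processed. As a minimal sketch, assuming a single sensor that reports one raw number per sample, a reading could be calibrated against its flat and fully bent values, clamped to [-1, 1], given a small dead zone so incidental flexing is ignored, and smoothed before it drives z-axis motion. `BendCalibration`, `BendFilter` and every numeric value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BendCalibration:
    """Hypothetical calibration for one bend sensor (illustrative values only)."""
    raw_flat: float           # raw reading with the phone held flat
    raw_max_bend: float       # raw reading at full bend in the "positive" direction
    dead_zone: float = 0.05   # normalized flex below this is treated as no bend
    smoothing: float = 0.3    # exponential smoothing factor in (0, 1]


class BendFilter:
    """Turns raw bend-sensor samples into a smoothed bend value in [-1, 1]."""

    def __init__(self, cal: BendCalibration) -> None:
        self.cal = cal
        self._smoothed = 0.0

    def normalize(self, raw: float) -> float:
        """Map a raw reading to [-1, 1]: 0 when flat, +/-1 at full bend.

        Bending the other way is ASSUMED to be symmetric around the flat value.
        """
        span = self.cal.raw_max_bend - self.cal.raw_flat
        x = max(-1.0, min(1.0, (raw - self.cal.raw_flat) / span))
        if abs(x) < self.cal.dead_zone:
            return 0.0
        # Rescale so the output ramps from 0 at the dead-zone edge to 1 at full bend.
        magnitude = (abs(x) - self.cal.dead_zone) / (1.0 - self.cal.dead_zone)
        return magnitude if x > 0 else -magnitude

    def update(self, raw: float) -> float:
        """Feed one sample; return the exponentially smoothed bend value."""
        a = self.cal.smoothing
        self._smoothed = a * self.normalize(raw) + (1.0 - a) * self._smoothed
        return self._smoothed
```

For example, with `raw_flat=512` and `raw_max_bend=800`, a reading of 520 falls inside the dead zone and yields 0, while a reading of 800 yields a target of 1 that the smoothed output approaches over successive samples. The smoothing trades a little latency for steadier motion; a real device would tune it, or drop it, empirically.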

Dr. Vertegaal envisions a number of applications for HoloFlex. The first is the use of bend gestures for Z-Input (input along the z-axis) to facilitate editing 3D models, for example when preparing them for 3D printing. A user can swipe the touchscreen to move objects along the x and y axes while squeezing the display to move them along the z-axis. Thanks to the wide viewing angle, multiple users can examine a 3D model simultaneously from different points of view.
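The swipe-plus-squeeze interaction described above can be sketched as a per-frame update of a selected object's position. This is a minimal illustration, not HoloFlex's implementation: it assumes the bend has already been normalized to [-1, 1] and treats it as a rate control (holding a bend keeps the object moving along z). Mapping bend directly to a z offset (position control) would be an equally valid choice, and the release does not say which is used. `Vec3`, `apply_input` and the tuning constants are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float


def apply_input(pos: Vec3, swipe_dx_px: float, swipe_dy_px: float, bend: float,
                dt: float, px_to_units: float = 0.01, z_speed: float = 2.0) -> Vec3:
    """Move a selected object: touch swipes drive x/y, bending drives z.

    swipe_dx_px, swipe_dy_px: finger movement since the last frame, in pixels.
    bend: normalized bend in [-1, 1], 0 when flat (assumed pre-calibrated).
    dt: seconds since the last frame.
    px_to_units, z_speed: hypothetical tuning constants (scene units per
    screen pixel, and z velocity in scene units/s at full bend).
    """
    return Vec3(
        x=pos.x + swipe_dx_px * px_to_units,
        # Screen y grows downward while scene y is taken to grow upward.
        y=pos.y - swipe_dy_px * px_to_units,
        # Rate control: a sustained bend keeps moving the object along z.
        z=pos.z + bend * z_speed * dt,
    )
```

Called once per frame with the latest touch delta and bend value, a flat phone and a still finger leave the object where it is, so the two input channels can be used together or independently.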

“By employing a depth camera, users can also perform holographic video conferences with one another,” says Dr. Vertegaal. “When bending the display, users literally pop out of the screen and can even look around each other, with their faces rendered correctly from any angle to any onlooker.”
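The release does not say how depth-camera frames become holographic video. One common approach, assumed here and not confirmed for HoloFlex, is to back-project each depth pixel into a 3D point with the pinhole camera model, X = (u − c_x)·Z / f_x and Y = (v − c_y)·Z / f_y, and then render the resulting point cloud from each lens's viewpoint. The sketch below does the back-projection plus a simple re-projection from a shifted virtual eye, which shows the parallax behind "looking around" a face. `depth_to_points`, `project_from` and the intrinsics in the test values are hypothetical.

```python
Point3 = tuple[float, float, float]


def depth_to_points(depth: list[list[float]], fx: float, fy: float,
                    cx: float, cy: float) -> list[Point3]:
    """Back-project a depth image into camera-space 3D points (pinhole model).

    depth[v][u] is the distance along the optical axis at pixel (u, v);
    values <= 0 mark pixels with no reading. fx, fy, cx, cy are the depth
    camera's intrinsics (focal lengths and principal point, in pixels).
    """
    points: list[Point3] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels the sensor could not measure
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def project_from(points: list[Point3], eye: Point3,
                 f: float) -> list[tuple[float, float]]:
    """Project points into a virtual pinhole camera at `eye` looking along +z.

    The camera is only translated, not rotated, to keep the sketch short.
    Points at or behind the eye are dropped.
    """
    ex, ey, ez = eye
    image = []
    for x, y, z in points:
        d = z - ez
        if d > 0:
            image.append((f * (x - ex) / d, f * (y - ey) / d))
    return image
```

Moving the virtual eye sideways shifts near points more than far ones in the projected image; that depth-dependent shift is the motion parallax a light field display reproduces for each onlooker.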

HoloFlex can also be used for holographic gaming. In a game such as Angry Birds, for example, users would be able to bend the side of the display to pull the elastic rubber band that propels the bird. As the bird flies across the screen, the holographic display makes it literally pop out of the screen in the third dimension.
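The bend-to-launch interaction can be sketched with an ideal spring. Assuming, hypothetically, that the bend amount linearly sets how far the band is pulled, the spring's stored energy ½kx² converts to the bird's kinetic energy ½mv², giving a launch speed v = x·√(k/m); the bird then follows a drag-free projectile path. The function names, the bend-to-pull mapping and all constants are illustrative assumptions, not a description of any actual game on HoloFlex.

```python
import math


def launch_speed(bend: float, max_pull: float, k: float, mass: float) -> float:
    """Speed of a bird released from an ideal spring (Hooke's law).

    bend in [0, 1] sets the pull distance (hypothetical linear mapping:
    pull = bend * max_pull). Spring energy 1/2*k*pull^2 becomes kinetic
    energy 1/2*mass*v^2, so v = pull * sqrt(k / mass).
    """
    pull = max(0.0, min(1.0, bend)) * max_pull
    return pull * math.sqrt(k / mass)


def trajectory(speed: float, angle_deg: float, g: float = 9.81,
               dt: float = 0.05, steps: int = 20) -> list[tuple[float, float]]:
    """Sample (x, y) positions of a drag-free projectile launched from the origin."""
    a = math.radians(angle_deg)
    vx, vy = speed * math.cos(a), speed * math.sin(a)
    return [(vx * t, vy * t - 0.5 * g * t * t)
            for t in (i * dt for i in range(steps + 1))]
```

A deeper bend stores more energy and launches the bird faster; the holographic "pop-out" itself would come from rendering the bird's position into the light field, which this sketch does not cover.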

Queen’s researchers will unveil HoloFlex on Monday, May 9, at ACM CHI 2016, the top conference in Human-Computer Interaction, in San Jose, California.

This research was supported by Immersion Canada Inc. and the Natural Sciences and Engineering Research Council of Canada (NSERC).

Media Footage

High-resolution photographs of HoloFlex are available rights-free. Please include a photo credit to Human Media Lab.

References

Gotsch, D., Zhang, X., Burstyn, J. and Vertegaal, R. HoloFlex: A Flexible Holographic Smartphone with Bend Input. In Extended Abstracts of ACM CHI’16 Conference on Human Factors in Computing Systems. ACM Press, 2016.

About the Human Media Lab

The Human Media Lab (HML) at Queen’s University is one of Canada’s premier Human-Computer Interaction (HCI) laboratories. Its inventions include ubiquitous eye-tracking sensors; eye-tracking TVs and cellphones; PaperPhone, the world’s first flexible phone; PaperTab, the world’s first flexible iPad; and TeleHuman, the world’s first pseudo-holographic teleconferencing system. HML is directed by Dr. Roel Vertegaal, Professor of HCI at Queen’s University’s School of Computing, and includes a number of graduate and undergraduate students with computing, design, psychology and engineering backgrounds.

Contact

Chris Armes

Communications Officer, Media Relations

613-533-6000 ext. 77513

chris.armes@queensu.ca